Jonathan Ebsworth (TechDotPeople) & Roger Bickerstaff (Bird & Bird)

Introduction

On the evening of January 16th, 2020, a small group gathered at the London offices of the international law firm Bird & Bird for a “Roundtable” discussion to explore and debate the responsible use of artificial intelligence (AI).

The key aim of the evening was to explore the range of issues that organisations need to consider when developing a responsible approach to AI, in both the private and public sectors, and on both the supply side and the user side of technology.

Attendees

The attendees came from a wide range of organisations, including telecoms providers, software suppliers, financial services, IT consultancies, the public sector, the law and politics. A number of the attendees were legally qualified and practising lawyers.

All of the attendees spoke in a personal capacity; they were not representing their organisations.

Practical Implementation Recommendations

A number of practical implementation recommendations can be distilled from the discussions:

- Create a consensus in the organisation on the principles that the organisation will adopt in its usage of AI.

- Build a culture throughout the organisation supporting, nurturing and promoting the responsible use of AI – probably as an aspect of wider corporate social responsibility.

- Ensure that the C-suite understands, adopts and takes a leadership role in the responsible use of AI – senior stakeholder leadership will be crucial to successful adoption.

- Proudly promote a responsible approach to the use of AI as a marketing differentiator – be clear about the organisation’s approach and use it to win business.

- Consider the need for a legal framework to support – and potentially mandate – the responsible use of AI.

- Continuously review, and provide feedback on, the organisation’s implementation of AI, to monitor how adoption works in practice and to improve the processes.

Setting the Scene – Telia’s Story (Eglė Gudelytė Harvey)

In order to provide context for the evening’s discussions, Eglė Gudelytė Harvey (Head of Legal, Telia, Sweden) provided a summary of Telia’s “journey” to develop a responsible approach to the use of artificial intelligence.

Telia was founded in 1853 and has recently had to evolve as rapidly as the world around it. In 2015, Telia set out to define what it means to be a new-generation telco. AI was at the heart of the new Telia, and the company decided to assemble a cross-functional, multidisciplinary team to ensure a holistic approach to balancing the risks and opportunities offered by AI.

Telia knew it was on a journey. To help achieve its goals, it pulled together six threads of activity:

- Principles for Trusted AI

- Guidance and Assessment

- AI Center of Excellence

- AI Sustainability Center

- Future Skills

- Piloting EU Guidelines

Principles for Trusted AI

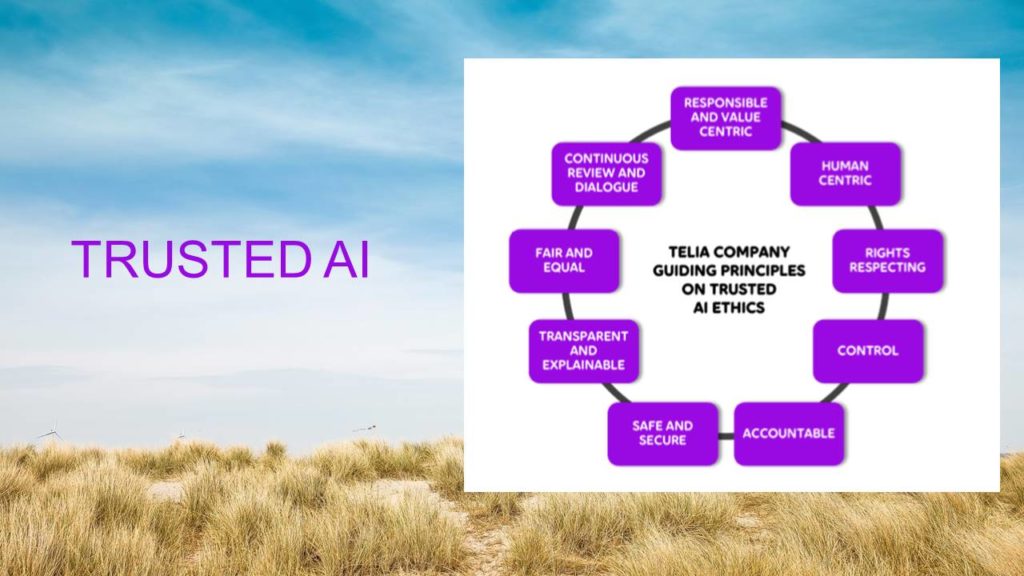

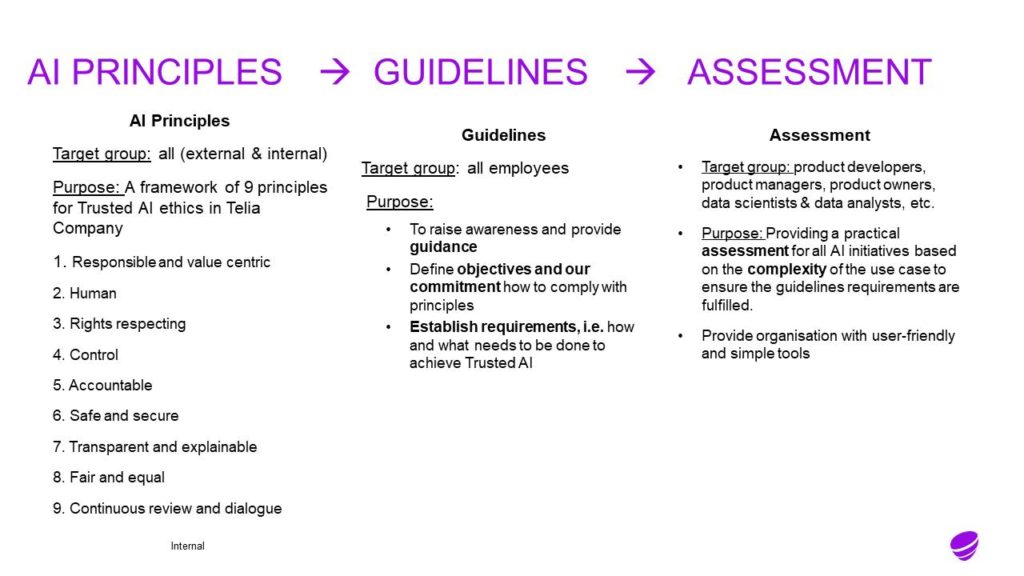

Telia developed nine guiding AI principles:

- Human-Centric

- Rights Respecting

- Control

- Accountable

- Safe and Secure

- Transparent and Explainable

- Fair and Equal

- Continuous Review and Dialogue

- Responsible and Value Centric

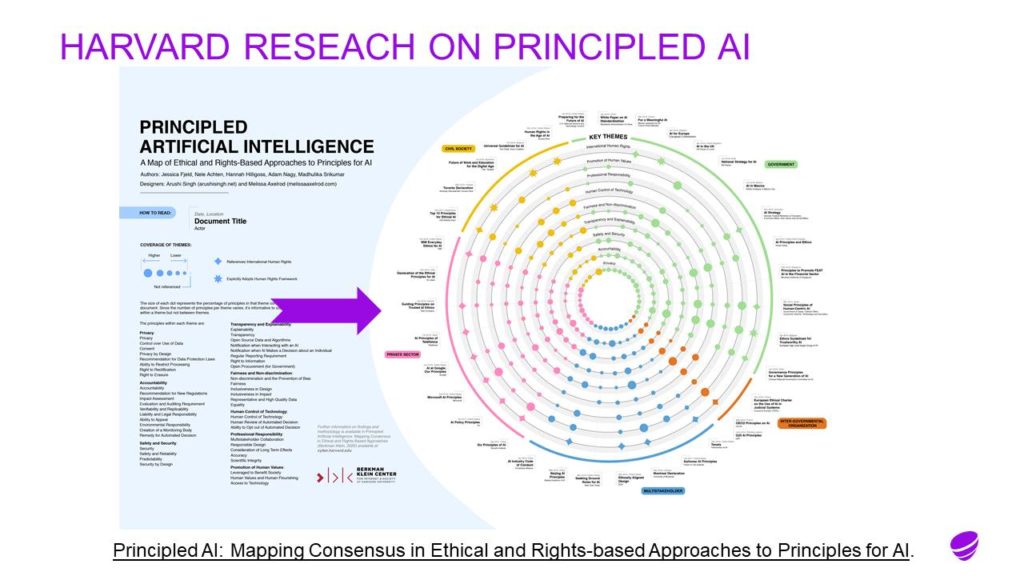

Eglė pointed to a very recent Berkman Klein Center analysis[1] of about 35 major sets of AI principles, which found that they all follow a very similar structure:

- Privacy

- Accountability

- Safety and Security

- Transparency and Explainability

- Fairness and Non-discrimination

- Human Control

- Professional Responsibility

- Promotion of Human Values

- International Human Rights

There may be a plethora of AI frameworks with varying emphases, but they are headed in similar directions. The approach Telia took was entirely consistent with the mainstream approach to AI Ethics.

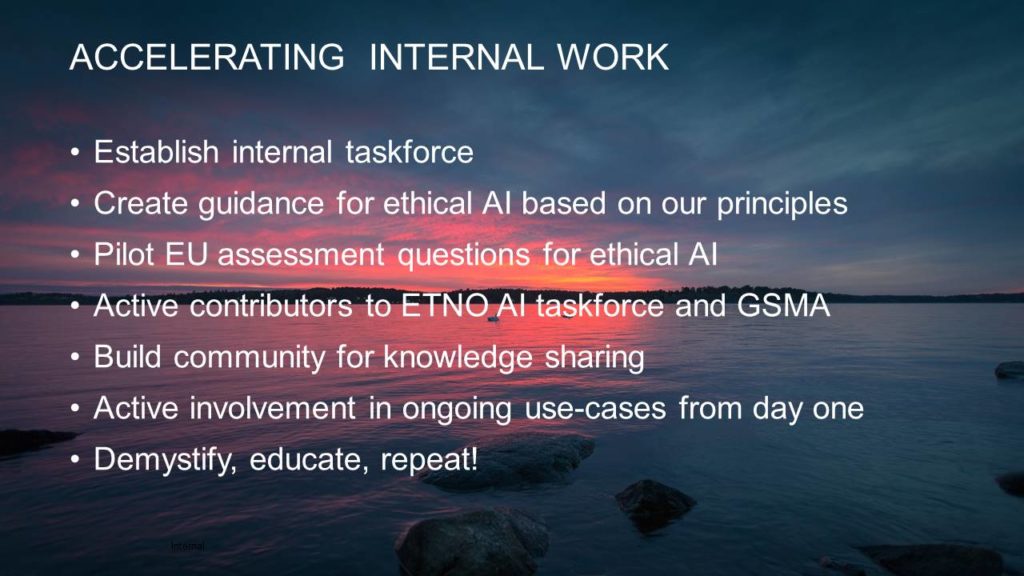

Accelerating the Journey

Setting out on an AI journey can be difficult, and the hardest part is knowing where to start. Telia’s advice is not to overthink the starting point: find a use case that can deliver value and begin there.

Telia took some very practical steps to accelerate the journey. At the heart of these was a sustained effort to demystify AI and educate everyone involved: bringing as many people as possible into the journey and establishing a community so that knowledge could be shared widely.

As the company progressed on its AI journey, its guidance was enhanced to bring greater clarity to the principles underpinning the Telia approach.

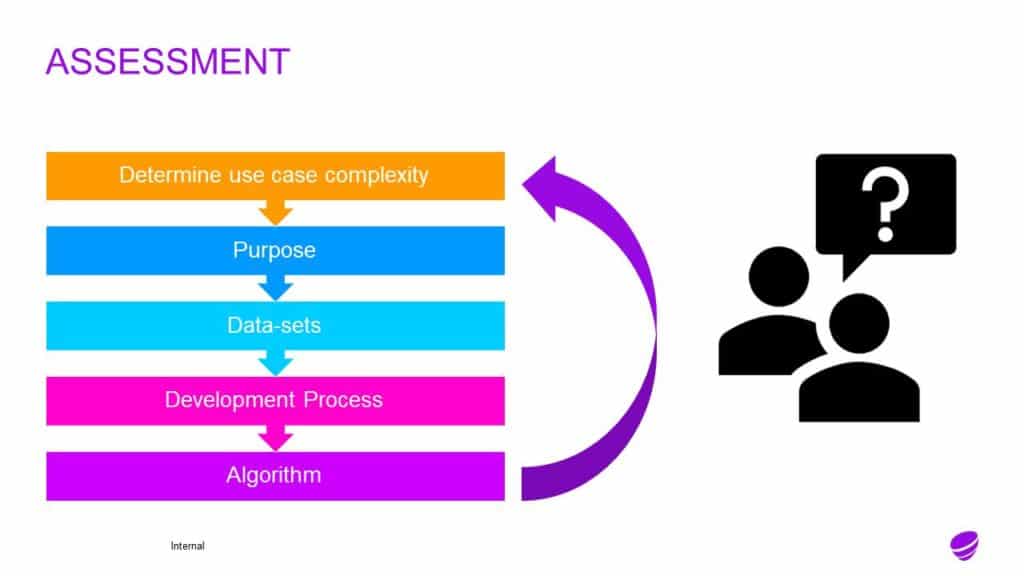

Guidance and Assessment

The purpose and focus of the principles, guidelines and assessment were clearly spelt out, with the aim of ensuring consistent values across everyone involved, directly or indirectly, whether employed by Telia or by a partner organisation.

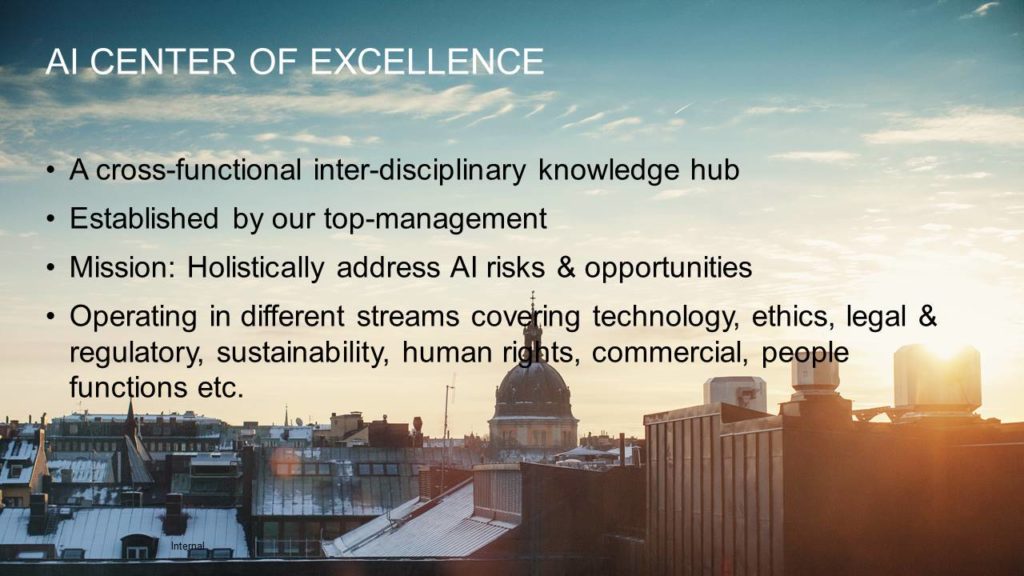

AI Center of Excellence

A critical part of Telia’s AI delivery capability was the AI Center of Excellence. It had top-level executive sponsorship and a clear mission: to ensure a holistic approach to addressing AI risks and opportunities. It was felt that, to apply the AI principles effectively, the relevant skills needed to be brought together to address both the intended and unintended consequences of AI solutions.

AI Sustainability Center

The AI Sustainability Center was established in 2018 to create a world-leading multidisciplinary hub to address the scaling of AI in broader ethical and societal contexts. Telia was a founding member, along with Atomico, Bonnier, Cirio, and Microsoft. Commercial interests have been blended with academic insights from KTH, Karolinska Institutet, Lund, Chalmers, Umeå, and Linköping universities, as well as from public agencies such as Skatteverket (the Swedish tax authority), the Swedish Public Employment Service, the City of Stockholm, and the City of Malmö. The intention is to unite around a common belief that it is better to navigate the AI journey together than alone. The goal is to lead in setting frameworks that put humanity at the core of AI solutions.

Members of the AI Sustainability Center have also worked together on joint projects (see over).

The Telia Approach and Lessons Learned

The key lessons from the Telia journey are summarised in this final slide.

There was a brief discussion of Telia’s experience of piloting the EU High-Level Expert Group (HLEG) Trustworthy AI Assessment List. Telia found the list rather heavyweight, but comprehensive.

Reactions and Discussion

Following this presentation, there was a general discussion which explored some of the central issues of AI solutions, focussing primarily on the current and emerging “narrow” AI technologies that are available now or will become available over the next few years.

There was deliberately little discussion of the implications of any form of ‘super intelligence’.

Developing a Culture for Responsible AI Usage

- AI Ethics by Design (as part of Corporate Social Responsibility (CSR)): Participants felt that one way for businesses to begin taking these issues seriously, and systematically, would be for AI Ethics to become part of more general CSR principles and procedures. Although it may be challenging to give this equal priority with the current, important emphasis on the environment, this might be an effective way to raise the significance of these issues in the minds of business leaders.

- Demystify and Engage: The pressing need to raise awareness of all these issues across business struck a loud chord with the participants, as did the challenge of bringing these capabilities to life in people’s minds. The concepts of Responsible AI need to be promoted at all levels within the organisation. The messaging and presentation will differ for the C-suite compared with (for example) junior operational staff, but they must be consistent and coherent with an overall set of Responsible AI principles.

- C-Level Understanding: In general, business (and political) leaders have a less than adequate understanding of the significance of the issues raised by AI Ethics. Senior stakeholder understanding and endorsement is crucial to the development of a responsible approach to AI.

- Wider Promotion: Private sector companies need to develop strategies that make Responsible AI Usage a competitive advantage in the marketplace. Otherwise, they will come under pressure to adopt “cut-throat” approaches to AI, to the detriment of more responsible approaches. There may be a role for a legal framework to provide a foundation in this area.

AI Ethics as a compliance activity?

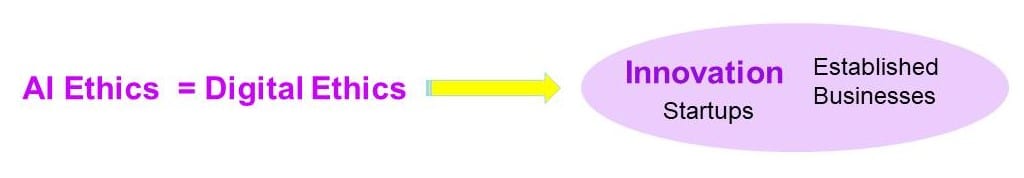

Jonathan Ebsworth (TechDotPeople) introduced a discussion on whether AI Ethics can or should be treated as a compliance activity. Although AI Ethics gets a lot of attention, it is only one (potent) part of a wider digital revolution. Established businesses have more to lose than startups by getting it wrong.

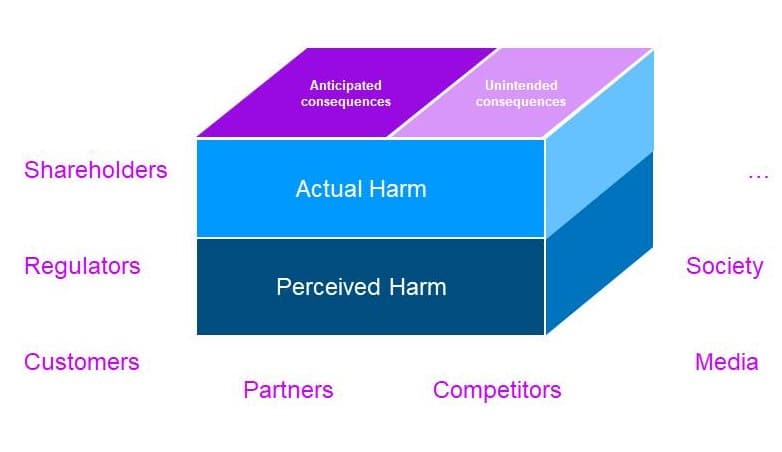

When tackling Digital Ethics, we must consider a very wide range of perspectives. It is not only actual harm that matters: the perception of harm is just as important, because our reputation is at stake. Assessing the intended consequences is relatively easy, but we are equally responsible for the unintended consequences of innovation. Tools such as doteveryone’s consequence scanning approach could help.

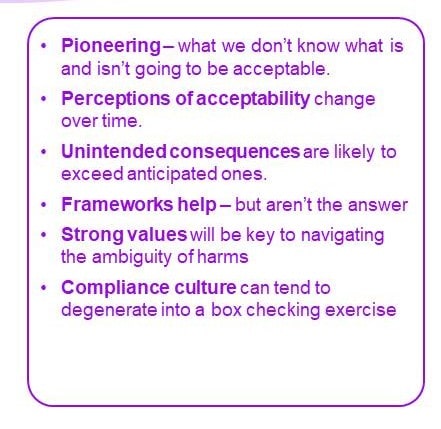

Finally, we need to remember that we are pioneering, so we cannot always be confident about what will or will not be acceptable. Perceptions change over time: something we tolerate today may be intolerable tomorrow (remember single-use plastic bags). Unintended consequences are likely to be the greatest danger. Frameworks will help to structure our thinking, but they are no guarantee. What is needed are very clear values that underpin our businesses: values that are lived by our business leaders, rewarded in our business operations and subscribed to by our teams. If digital ethics is treated as a compliance activity, it will likely degenerate into a box-checking exercise, ultimately an ethics-avoidance task, rather than a vibrant part of testing our new ideas to make sure they enhance our business rather than destroy it.

Accountability

- Human Accountability: There was strong support for the principle that humans remain accountable, in all circumstances, for system-determined decisions and recommendations. Context was seen as critical, and the appropriate level of human involvement in the decision-making process was seen as closely linked to the nature of the decision being made. We recognised that some machine-made recommendations may already be being made inappropriately.

- Implicit Anthropomorphism: Interactive AI solutions tend to manifest in pseudo-human form (e.g. Alexa, Siri, chatbots) without announcing that they are non-human. AI is architecturally incapable of empathy, yet we as humans instinctively respond to anything that behaves in a human-like manner as though it were human. This was seen as a potentially serious issue that is not currently being addressed well. We discussed whether machine-based interactions need to identify themselves explicitly, and possibly remind us of their non-human nature. (This is sometimes referred to as a ‘Turing Red Flag’.[2])

- Explainable AI: In deterministic use cases, AI can be explainable; stochastic (non-deterministic) AI currently is not. Will Explainable AI (XAI) help here, and how much can it do already? This question was not answered clearly in the room, though the feeling was that many forms of machine learning could be traced and potentially reproduced, whereas recommendations made by systems based on deep learning are almost impossible to explain or trace in a meaningful way.[3]

Training Data Sets and Algorithms

The general view in the room was that training data sets are as significant as the algorithms themselves. Together they form an inseparable pair, which may need some form of legal protection.

Legal Framework

Eglė Gudelytė Harvey commented on the agenda of the European Commission and, in particular, of its President, Ursula von der Leyen, who intends to introduce proposals for a legal framework for AI rapidly. It now seems that, initially, the proposals are likely to take the form of a white paper on the issues rather than specific legislative proposals.

The need for a general legal framework to reinforce and underpin the adoption of a responsible approach to the use of AI was questioned. Even the lawyers in attendance did not agree on whether an underpinning legal framework would be beneficial. There was considerable scepticism about legal obligations in this field, with concern that they could promote a negative “tick-box” compliance culture.

There was more consensus among the lawyers on the difficulty of providing strong IP protection for AI. The underlying AI algorithms may be excluded from patent protection, and many AI algorithms are not novel at all, some dating back many decades. The transparency obligations of patent protection could offer some form of model for transparency obligations for AI solutions, but the parallels were not felt to be strong.

Conclusions

A concern was raised that the present ‘fascination’ with facial recognition technology in AI debates might be more an expression of fashion than an effective way of protecting personal privacy. Lord Reid suggested that this battle was already largely lost, and that we would do better to fight to defend human autonomy than to sustain a doomed rear-guard action. He challenged the whole group to sustain the conversation begun that evening and turn it into specific action.

We intend to send out some further thoughts to start this discussion in the coming days; in the meantime, we feel the key opportunities lie in:

- Raising awareness – C-level, Regulators, Politicians and the community as a whole;

- Bridging the gap between guidelines and practical implementation;

- Ensuring that ethical values reach through from the contracting business to the subcontractors who may be building or operating the pioneering solution;

- Exploring how we might defend human autonomy.

PowerPoint Presentation

The roundtable presentation can be downloaded in its entirety here:

Attendees

| Name | Organisation |
| --- | --- |
| Mark Deniston | Brevan Howard |
| Mike Gawthorne | Serocor |
| Eglė Gudelytė Harvey | Telia |
| Jen Kitson | JDA |
| David Levinger | ISRS |
| Anna O’Brien | HSBC |
| Lord John Reid | ISRS |
| Richard Seeley | Glowfori |
| Howard Rubin | Bird & Bird |
| Katharine Stephens | Bird & Bird |
| Roger Bickerstaff | Bird & Bird |
| Jonathan Ebsworth | TechDotPeople |

[1] http://wilkins.law.harvard.edu/misc/PrincipledAI_FinalGraphic.jpg

[2] https://cacm.acm.org/magazines/2016/7/204019-turings-red-flag/abstract

[3] https://royalsociety.org/-/media/policy/projects/explainable-ai/AI-and-interpretability-policy-briefing.pdf