1. Background

The need for an ethical approach to the implementation of AI solutions has gained widespread acceptance in academic communities, the public sector and, increasingly, in commercial organisations. A considerable industry has emerged providing commentary and guidance on the topic. A recent Harvard study has reviewed 36 different sets of AI ethical principles and discovered a number of thematic trends.[1]

There is less guidance on an appropriate legal framework to regulate the implementation of AI solutions. The Council of Europe recognises the need for legislation and will propose a strategic agenda that includes AI regulation as one of the major challenges to be addressed in finding a fair balance between the benefits of technological progress and the protection of fundamental values. However, this will take time – a deadline of 2028 has been set for the process.

In our initial note in this series on A Light Touch Regulatory Framework for AI [2] we argued for the need for a ‘light-touch’ regulatory framework for technology solutions using AI, with a primary focus on transparency obligations which would be dependent on the human impact of the AI solution. We argued that the key components of the Light Touch Framework for AI should be organized around a transparency requirement relating to registrable (but not necessarily approved) AI solutions – with the assessment of the required level of transparency focusing on two elements:

- technical assessment – a quality, rationality and sensibility assessment of the AI solution, including the data sets used; and

- human importance assessment – relating to the impact on the fundamental rights of individuals and the importance to the state.

Both the technical and the human importance assessments should be carried out by the organisations developing the AI solutions. These assessments should then be subject to public scrutiny pursuant to the transparency requirements. The transparency requirement will give interested individuals and third parties an opportunity to review AI solutions prior to and during their deployment and operation. Except for the most invasive AI solutions, where some form of approval process may well be necessary, citizen and third-party review is a more modern alternative to regulatory approval processes, which can be slow and bureaucratic.

We will examine the transparency requirements in more detail in a later article in this series. This article focuses on our proposed approach to classifying AI solutions by their technical quality (Technical Assessment – TA) and their human importance (Human Importance Assessment – HIA), which together position a solution on the spectrum of required transparency. We propose an AI Index (Ai2) which relates to both the TA and the HIA.

2. Technical assessment (TA): Quality, rationality and sensibility assessment of AI solutions

The goal of the assessment of the quality, rationality and sensibility of AI solutions is to encourage the developers and system integrators of AI solutions to assess the overall quality of their AI algorithms, data and their quality assurance (QA) processes.

The technical assessment should not overburden product development and should not require the disclosure of differentiating IP. The technical assessment framework for AI solutions should draw on concepts used in the assessment of highly critical and sensitive products, such as aircraft and nuclear power plants, and apply these concepts to non-critical applications, for example an AI-powered facial recognition system in a smartphone or a recommender system used for online shopping. The technical assessment should consist of three elements as follows:

Quality: Quality needs to be assessed for the data sets – if any – used by AI and the AI algorithm itself:

Quality of the data – Data quality includes using data sets that cover samples from all possible inputs, so that biases in the AI solution are accounted for. Standard data sets – for example ImageNet [3] – are widely used and curated, and can be given a higher score than data sets developed in-house, whether open sourced or proprietary. However, even standard data sets like ImageNet can have defects that may result in bias [4] and flaws. Care should be taken to consider both the data set source and the testing of the data set in the AI solution when arriving at the data quality score.

Quality of the AI algorithm – the assessment of the quality of the AI algorithm includes testing the algorithm for boundary scenarios and failure analysis. There is a direct correlation between the quality of the AI algorithm and the quality of the AI solution. Quality assurance is a well understood and widely adopted process composed of unit testing, integration/system testing, and performance, regression and security testing. Depending on the complexity and level of integration of the AI algorithm with the rest of the AI solution, a quality score can be provided either for the AI algorithm or for the overall AI solution. Complexity and integration levels are covered in the next section.

Failure analysis is common for solutions that are critical in nature and where faulty behavior can be catastrophic. These include, but are not limited to, aircraft, nuclear power plants and defense-related technologies and solutions. The analysis includes Fault Tree Analysis (FTA), Failure Mode and Effects Analysis (FMEA) and Common Cause Analysis (CCA), as defined in ARP-4761. A score can be provided if such failure analysis has been performed.

Rationality: The rationality assessment includes the developer attesting that the AI algorithms used (without disclosing the algorithms if they are not open sourced) have gone through a product design cycle in which they have been vetted by product managers and the development team, with sign-off from management. The following issues should be considered:

- The complexity of the AI solution

- Highly integrated vs. loosely integrated AI solution

- Open source vs. closed sourced nature of AI algorithm

- The similarity to existing algorithms

- Type of AI algorithm – does the algorithm use supervised learning, unsupervised learning, reinforcement learning or transfer learning? A higher score can be given to solutions using supervised learning algorithms, as these will be easier to assess for design flaws. Unsupervised learning algorithms need a better understanding of the boundary conditions and algorithm design, as their behavior changes over time as they encounter new data after deployment in production. Unsupervised learning algorithms are inherently riskier if not designed well.

- Due process – An AI solution that has gone through the full and well documented product development life cycle will have a higher score.

Sensibility: The sensibility assessment relates to a “common sense” review of whether AI is actually needed or whether an alternative approach would be better suited for the solution. The goal for the sensibility score is to encourage developers to plan, assess and deploy after making an internal review of the AI solution. An experienced developer should be capable of making the decision and coming up with a score. For example, an AI solution using an unsupervised learning algorithm where the product is a learning system that can change behaviour in flight will still get a high score provided its use is preferred and justified.
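
As a purely illustrative sketch, the three elements above could be recorded as sub-scores and combined into a single TA value. The article does not prescribe how the elements are weighted, so the `TechnicalAssessment` class, the 0 to 100 scale for each element and the equal default weights are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TechnicalAssessment:
    """Hypothetical record of the three TA elements, each scored 0-100."""

    quality: float
    rationality: float
    sensibility: float

    def score(self, weights=(1.0, 1.0, 1.0)) -> float:
        """Weighted mean of the three elements.

        Equal weights are an assumption; the article does not specify them.
        """
        parts = (self.quality, self.rationality, self.sensibility)
        for value in parts:
            if not 0 <= value <= 100:
                raise ValueError("each element must be between 0 and 100")
        total_weight = sum(weights)
        if total_weight <= 0:
            raise ValueError("weights must sum to a positive number")
        return sum(w * v for w, v in zip(weights, parts)) / total_weight


# Example with made-up sub-scores.
ta = TechnicalAssessment(quality=80, rationality=70, sensibility=90)
print(ta.score())  # 80.0
```

Different weights could be passed where, for instance, the quality of the data and algorithm is considered more important than the other two elements.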

3. Human Importance Assessment (HIA)

The second pillar of the assessment of AI solutions relates to their potential impact in terms of the fundamental rights of individuals or the importance to the state.

Individual Rights: AI solutions that will have a significant impact on individuals should receive a greater level of scrutiny than those which solely facilitate business processes. AI solutions that are intended to have a significant impact on individuals include AI-based facial recognition solutions, particularly those that are used to support police operations. AI solutions that provide inputs into police activities that may affect the security and liberty of individuals need careful attention. These solutions contrast with AI solutions in many business environments. For example, the use of AI in legal due diligence for mergers and acquisitions and in electronic disclosure in civil litigation is less likely to have a direct human impact.

A framework is needed in order to assess the impact on individuals. This area is inevitably subjective and dependent on societal values, but the United Nations approach to the fundamental rights of individuals is a balanced starting point for this form of assessment. The UN Universal Declaration of Human Rights provides that:

Everyone is entitled to all the rights and freedoms set forth in the Declaration, without distinction of any kind, such as race, colour, sex, language, religion, political or other opinion, national or social origin, property, birth or other status. Furthermore, no distinction shall be made on the basis of the political, jurisdictional or international status of the country or territory to which a person belongs, whether it be independent, trust, non-self-governing or under any other limitation of sovereignty.

Of course, the UN principles are high-level and very general. In contrast to the Technical Assessment processes, some of which are already established in the industry, this form of human impact assessment is new. Most organizations that develop AI solutions will need assistance and guidance in order to make this assessment. However, the increasing focus on and understanding of data ethics may well provide resources to assist organisations in carrying out these assessments.

Importance to the State: The need for, and the appropriateness of, state regulation in the context of AI solutions has been stated succinctly by Paul Nemitz (one of the key initiators of developments in European privacy laws). He has said:

“which of the challenges of AI can be safely and with good conscience left to ethics, and which challenges of AI need to be addressed by rules which are enforceable and based on democratic process, thus laws. In answering this question, responsible politics will have to consider the principle of essentiality which has guided legislation in constitutional democracies for so long. This principle prescribes that any matter which is essential because it either concerns fundamental rights of individuals or is important to the state must be dealt with by a parliamentary, democratically legitimized law”.[5]

Of course, there is an inevitable ebb and flow in the extent of state intervention and regulation based on the interplay between economic circumstances and politics. The COVID-19 crisis has highlighted the role of the state, even in countries that have attempted to downplay state intervention, with governments aiming to play a more active role with more extensive safety nets, faster economic growth and greater social protection, whilst controlling (and sometimes reducing) public expenditure.

Dilemmas arise from the state’s role in the use of AI stemming from the need to balance the potential efficiency gains and advances from the use of AI, with the need for the state to provide protections for its citizens. Beyond this, state intervention can have many and various rationales and objectives, including the allocation of limited resources, the redistribution of wealth, etc. However, as AI is not a limited resource, there appears to be limited need for state intervention and regulation on these grounds.

4. Relationship of Technical and Human Importance Assessment – the AI Index

The AI Index provides a relationship between the Technical Assessment (TA) and the Human Importance Assessment (HIA). In this approach, HIA and TA are each allocated a value between 0 and 100. The AI Index (Ai2) is then defined as the ratio of HIA to TA (HIA/TA). Our recommendation is that the AI Index score should be registered with a central public registry.

In general terms, a high Technical Assessment score means that a product is of high quality and includes AI that is properly designed. This should lead to a reduced transparency requirement, as a higher Technical Assessment score will balance out a higher Human Importance Assessment score. Examples where this would be true include AI solutions in critical environments, for example power grids, nuclear power plants and defence systems. These solutions are typically subject to a significant level of engineering scrutiny, are built by experienced software and hardware development professionals, and will typically have high Technical Assessment scores. In terms of transparency, a high Technical Assessment score combined with a low Human Importance Assessment score would indicate that the AI solution would not be subject to a high degree of transparency.

As well as the Human Importance (HIA) to Technical quality (TA) ratio, there should be a higher transparency requirement for AI solutions with a higher level of Human Importance.

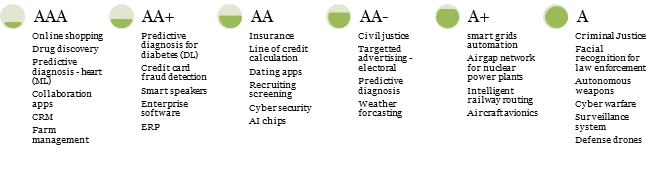

Whilst the thresholds need to be calibrated, these general principles can be demonstrated as follows:

| AI Index (Ai2) | HIA/TA | HIA | Requirements |
| --- | --- | --- | --- |
| AAA | 0 < HIA/TA ≤ 1 | and HIA ≤ 50 | No transparency |
| AA+ | 1 < HIA/TA ≤ 2 | | Notification only |
| AA | | 50 < HIA ≤ 75 | Notification only |
| AA- | 2 < HIA/TA ≤ 3 | | Notification and Human Impact Assessment |
| A+ | | 75 < HIA ≤ 100 | Notification and Human Impact Assessment |
| A | HIA/TA > 3 | | Notification, Human Impact Assessment and Prior Approval |
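
The table above can be read as two sets of thresholds, one on the HIA/TA ratio and one on HIA alone. A minimal Python sketch of that reading follows. It assumes that the more demanding of the two tiers applies, that TA must be greater than zero for the ratio to be defined, and that a ratio of exactly 0 is treated as AAA. The function name `ai_index` is illustrative, and the thresholds themselves would still need calibration.

```python
# Ratings ordered from least to most demanding, following the table.
RATINGS = ["AAA", "AA+", "AA", "AA-", "A+", "A"]

REQUIREMENTS = {
    "AAA": "No transparency",
    "AA+": "Notification only",
    "AA": "Notification only",
    "AA-": "Notification and Human Impact Assessment",
    "A+": "Notification and Human Impact Assessment",
    "A": "Notification, Human Impact Assessment and Prior Approval",
}


def ai_index(hia: float, ta: float) -> str:
    """Return an Ai2 rating for HIA and TA scores on the 0-100 scale."""
    if not 0 <= hia <= 100:
        raise ValueError("HIA must be between 0 and 100")
    if not 0 < ta <= 100:
        # Assumption: TA = 0 is rejected because HIA/TA would be undefined.
        raise ValueError("TA must be greater than 0 and at most 100")
    ratio = hia / ta

    # Tier implied by the HIA/TA column.
    if ratio <= 1:
        by_ratio = "AAA"
    elif ratio <= 2:
        by_ratio = "AA+"
    elif ratio <= 3:
        by_ratio = "AA-"
    else:
        by_ratio = "A"

    # Tier implied by the HIA column alone.
    if hia <= 50:
        by_hia = "AAA"
    elif hia <= 75:
        by_hia = "AA"
    else:
        by_hia = "A+"

    # Assumption: the more demanding of the two tiers applies.
    return max(by_ratio, by_hia, key=RATINGS.index)


# Made-up scores, for illustration only.
print(ai_index(30, 60))  # AAA
print(ai_index(60, 90))  # AA
print(ai_index(90, 95))  # A+
print(ai_index(40, 10))  # A
```

Under this reading, a solution with high scores on both assessments (for example HIA 90 and TA 95) lands at A+ because of its HIA alone, even though its ratio is below 1.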

The Royal Society Policy Brief on Explainable AI [6] makes the case for the interpretability of AI. It highlights the future need for a ranking system to differentiate areas where AI is deployed that may have varying degrees of impact, and the need for building accountability into AI-based systems. Our proposed AI Index combined with a self-regulatory framework provides the basis for a solution to these two needs.

Some examples of how the AI Index rating could operate for some AI solutions are set out below. These are merely indicative. In practice, these ratings will vary based on actual values of HIA and TA. They are provided as examples based on expected values of HIA, the human importance level, and TA, the AI maturity for these products.

- Advertising: AI used in targeted advertising on social media or other websites will typically be rated AAA or AA-, based on both the quality of the algorithm and related software used (TA) and the particular use case, which determines its human importance (HIA). Recommendations of deals and items you may like to buy on Amazon.com or Facebook, or suggested friends on Instagram, have a smaller HIA than targeted advertisements by candidates for national elections. Both may have a similar TA. We expect the former to be rated AAA and the latter AA-.

- Healthcare: AI solutions in healthcare include predictive diagnosis of heart disease, analysis of medical records, robotics-based surgery and drug discovery. The AI Index for these healthcare solutions will vary. AI solutions used in drug discovery can have a rating of AAA, while an AI solution used for predictive diagnosis can be rated AAA or AA+ based on a TA that includes the quality of the AI solution and the type of AI algorithm used. Deep Learning (DL) based predictive diagnosis of diabetes using a retina imaging dataset should have a rating of AA+ due to its somewhat higher HIA. A heart disease diagnosis that uses a simpler machine learning (ML) algorithm to analyze medical records could have an Ai2 of AAA due to a more deterministic outcome through the use of medical records instead of images for diagnosis.

- Judiciary: AI used in criminal justice will fall under the AI Index (Ai2) rating of A because its high HIA heightens the need for transparency. AI used in civil justice systems, for example for civil dispute resolution or autonomous traffic violation enforcement, is likely to have an AI Index of AA-.

- Insurance: AI solutions can be used to set personalized policy and premium rates and to detect fraud. These types of solution involve both personal data from an individual policy holder and aggregate data for predictions. These can have an Ai2 rating of AA.

- Finance: Examples of AI solutions in the financial industry include automated calculation of lines of credit, credit card fraud detection and loan risk calculation based on background information including salary, zip code and individual background history. As recent news [7] about Apple Card, issued by Apple with Goldman Sachs as the issuing bank, has shown, even if a solution (AI-based or otherwise) is built to perform without bias by not considering inputs such as gender, the underlying dataset or the algorithm used can still be biased. This makes the solution biased and leads to unforeseen consequences, in this case smaller lines of credit on Apple Card for women than for men. Because of the large-scale consequences of such issues, we expect that financial institutions that provide AI solutions will ensure a high TA but will at the same time have a moderately high HIA due to the wide-scale impact, resulting in an Ai2 of AA+ or AA.

- Critical Infrastructure: AI solutions can include systems for more efficient maintenance and operation of smart grids, nuclear power plants, railways, aerospace and roadways. These usually have high TA and HIA scores. Examples include a system to maintain an air-gapped network within a nuclear power plant or an avionics system for an aircraft. These should have an Ai2 of A+.

- Defense: Autonomous weapons, cyber warfare, surveillance systems and drones. These systems are of high importance to the state and the citizen alike (HIA) and should be built to a high technical quality (TA). These systems also entail a high level of transparency, which is what the recommended Ai2 rating of A implies for such systems.

We will cover the transparency requirements in the next article. Nevertheless, as a guideline, the transparency requirement and the disclosures entailed should not require the disclosure of underlying IP or enable a third party to replicate the solution.

Figure 1. AI Index ratings with examples

5. Summing up

The AI Index approach to the classification of AI solutions provides a useful assessment of the likely impact of these solutions, taking into account the technical quality and the human importance of the solution. Both of these elements need to be considered when determining the overall impact of an AI solution. At the most basic level of assessment, AI solutions with a low AI Index rating, either through a high human importance aspect or a low technical rating, should be subject to more scrutiny than those with a high AI Index rating.

This approach to the classification of AI solutions is important in the context of the transparency obligations relating to AI solutions and the proposed legal framework around these transparency obligations which we will explore in the next article in this series.

Roger Bickerstaff and Aditya Mohan

About the authors:

Roger Bickerstaff is a partner at Bird & Bird LLP in London and San Francisco and Honorary Professor in Law at Nottingham University. Bird & Bird LLP is an international law firm specializing in Tech and digital transformation.

Aditya Mohan is a founder at Skive it, Inc. in London and San Francisco. He has research experience from Intel Research, IBM Research Zurich, MIT Media Labs and HP Labs. He studied at Brown University and IIT. San Francisco’s Skive it, Inc., with an additional registered office in the United Kingdom, is a Deep Learning company building autonomous machines that can feel.

[1] https://dash.harvard.edu/bitstream/handle/1/42160420/HLS%20White%20Paper%20Final_v3.pdf?sequence=1&isAllowed=y

[2] https://digitalbusiness.law/2019/11/a-light-touch-regulatory-framework-for-ai/

[4] https://www.wired.com/story/viral-app-labels-you-isnt-what-you-think/

[5] “Constitutional Democracy and Technology in the Age of Artificial Intelligence”, https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3234336

[6] https://royalsociety.org/-/media/policy/projects/explainable-ai/AI-and-interpretability-policy-briefing.pdf

[7] The Apple Card Didn’t ‘See’ Gender—and That’s the Problem. https://www.wired.com/story/the-apple-card-didnt-see-genderand-thats-the-problem/